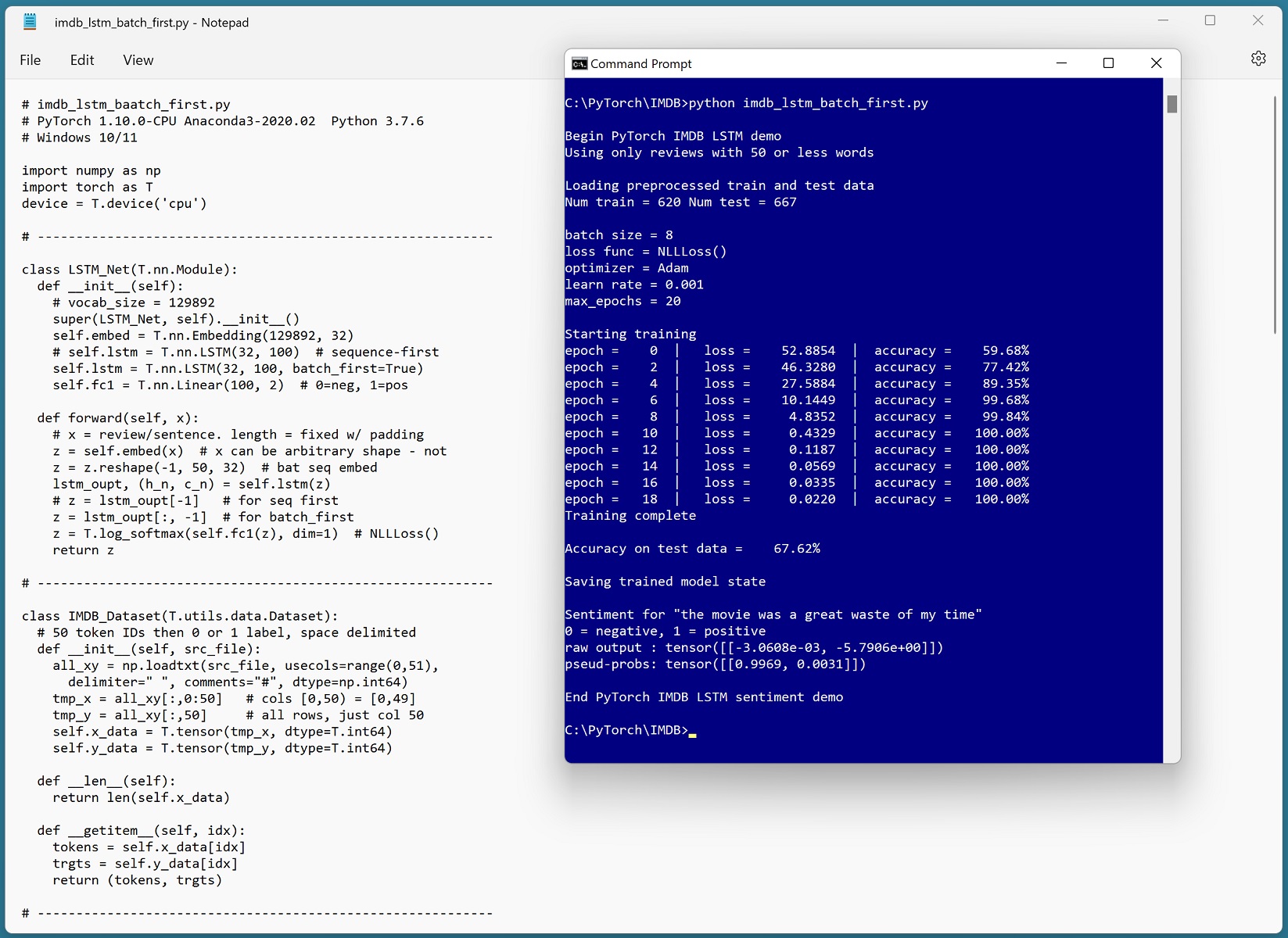

"Taming LSTMs: Variable-sized mini-batches and why PyTorch is good for your health" (William Falcon, Towards Data Science)

"Do we need to set a fixed input sentence length when we use padding-packing with RNN?" (PyTorch Forums, nlp category)

"Dynamic batching and padding batches for NLP in deep learning libraries" (Data Science Stack Exchange, pytorch tag)
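A minimal sketch of the idea these resources discuss: pad each mini-batch only to the length of its own longest sequence, rather than to one fixed sentence length for the whole dataset. It is plain Python with no PyTorch dependency. It assumes each sequence is already a list of integer token ids. `PAD_ID = 0`, `pad_batch`, and `dynamic_batches` are hypothetical names used only for illustration.

```python
from typing import Iterator, List, Sequence, Tuple

PAD_ID = 0  # hypothetical padding index; use your vocabulary's actual pad id


def pad_batch(
    batch: Sequence[Sequence[int]], pad_id: int = PAD_ID
) -> Tuple[List[List[int]], List[int]]:
    """Pad every sequence in one mini-batch to the longest sequence in that batch."""
    lengths = [len(seq) for seq in batch]
    max_len = max(lengths, default=0)  # per-batch length, not a global constant
    padded = [list(seq) + [pad_id] * (max_len - len(seq)) for seq in batch]
    return padded, lengths


def dynamic_batches(
    data: Sequence[Sequence[int]], batch_size: int, bucket: bool = True
) -> Iterator[Tuple[List[List[int]], List[int]]]:
    """Yield (padded_batch, lengths) pairs; no fixed input length is required.

    With bucket=True, sequences are first ordered by length so each
    mini-batch groups similar lengths and wastes less padding.
    """
    indices = list(range(len(data)))
    if bucket:
        indices.sort(key=lambda i: len(data[i]))
    for start in range(0, len(indices), batch_size):
        yield pad_batch([data[i] for i in indices[start:start + batch_size]])
```

For example, `list(dynamic_batches([[5, 6, 7], [8], [9, 10], [11, 12, 13, 14]], batch_size=2))` pads the first batch to length 2 and the second to length 4. In PyTorch, each padded batch would typically be converted to a tensor and passed with its `lengths` to `torch.nn.utils.rnn.pack_padded_sequence(..., batch_first=True, enforce_sorted=False)` so the RNN skips padded positions. Note that bucketing reorders the data, so any labels would need the same reordering; this sketch handles inputs only.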